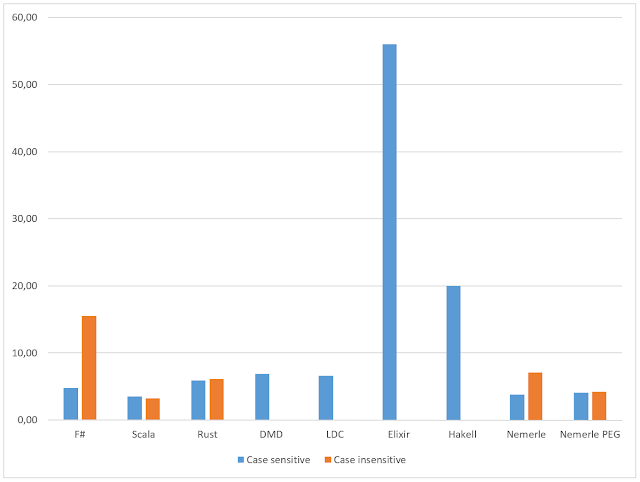

Load balancing: Rancher vs Swarm

Rancher has a built-in load balancer (HAProxy). Let's compare its performance with the one in Docker Swarm. I will use 3 identical nodes:

- 192GB RAM

- 28-core i5 Xeon

- 1GBit LAN

- CentOS 7

- Docker 1.12

- Rancher 1.1.2

The benchmark target is a hello-world HTTP server written in Scala with Akka-HTTP and Spray JSON serialization (I don't think the stack matters much here); the sources are on GitHub. As the benchmark tool I will use ApacheBench (ab).

As a baseline, I exposed the web server port outside the container and ran the following command:

ab -n 100000 -c 20 -k http://1.1.1.1:29001/person/kot

It shows 22400 requests per second. I'm not sure whether that's a great result for Akka-HTTP, considering that some services written in C can handle hundreds of thousands of requests per second, but that's not the main topic of this blog post. (I also ran the test with 100 concurrent connections (-c 100), and it showed ~50k req/sec. I don't know if this number is good enough either :) )
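If you would rather collect the throughput figure automatically than read it off the report, the line to look for in ab's output starts with `Requests per second:`. A minimal sketch; the function name `rps_from_ab` is my own and not part of ab:

```shell
# Hypothetical helper: read ab's report on stdin and print only the
# mean requests-per-second value.
rps_from_ab() {
    # ab prints a line like: "Requests per second:    22400.00 [#/sec] (mean)"
    awk '/^Requests per second:/ { print $4 }'
}

# Usage (same command as the baseline run):
#   ab -n 100000 -c 20 -k http://1.1.1.1:29001/person/kot | rps_from_ab
```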

Next, I created a Rancher "stack" containing our web server and the built-in load balancer:

Now I run the same benchmark against the load balancer, increasing the number of akka-http containers one by one:

| Containers | Req/sec |
|---|---|
| 1 | 755 |
| 2 | 1490 |
| 3 | 2200 |
| 4 | 3110 |
| 5 | 3990 |
| 6 | 4560 |
| 7 | 4745 |
| 8 | 4828 |
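One way to read the Rancher numbers is to divide each result by the container count, which shows where scaling stops being linear. A small sketch with awk; `per_container` is a hypothetical helper name, and the input is just the table rows as "containers req/sec" pairs:

```shell
# Hypothetical helper: for each "containers req/sec" pair on stdin,
# print the container count and the throughput per container.
per_container() {
    awk 'NF == 2 { printf "%d %.1f\n", $1, $2 / $1 }'
}

# Rancher results from the table above
per_container <<'EOF'
1 755
2 1490
3 2200
4 3110
5 3990
6 4560
7 4745
8 4828
EOF
```

With these figures, the per-container throughput stays between roughly 730 and 800 req/sec up to six containers and drops to about 600 at eight, so the gain from each extra container shrinks after the sixth.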

OK, it looks like HAProxy introduces a lot of overhead. Let's see how well the Swarm internal load balancer handles the same load. After initializing Swarm on all nodes, I create a service:

docker service create --name akka-http --publish 32020:29001/tcp private-repository:5000/akkahttp1:1.11

Check that the container is running:

$ docker service ps akka-http

0yb99vo9btmx3t1wluvd0fgo6 akka-http.1 1.1.1.1:5000/akkahttp1:1.11 xxxx Running Running 3 minutes ago

OK, great. Now I will scale our service up one container at a time, running the same benchmark as I go:

docker service scale akka-http=2

docker service scale akka-http=3

...

docker service scale akka-http=8
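The scale-then-benchmark steps can also be scripted. This is only a sketch of how I might automate it, not what was actually run for this post: `bench_scaled` and `SETTLE_SECONDS` are hypothetical names, the fixed wait is an arbitrary guess at how long new tasks need to start, and `<node-ip>` is a placeholder for whichever node you benchmark against.

```shell
# Hypothetical helper: scale a Swarm service to each replica count in turn
# and run the same ab benchmark after every step.
# Arguments: service name, URL to benchmark, then one or more replica counts.
bench_scaled() {
    service=$1
    url=$2
    shift 2
    for n in "$@"; do
        docker service scale "$service=$n" >/dev/null || return 1
        # Assumption: a fixed pause is enough for the new tasks to come up.
        sleep "${SETTLE_SECONDS:-15}"
        printf 'replicas=%s: ' "$n"
        ab -n 100000 -c 20 -k "$url" 2>/dev/null | grep '^Requests per second:'
    done
}

# Usage:
#   bench_scaled akka-http http://<node-ip>:32020/person/kot 1 2 3 4 5 6 7 8
```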

Results:

| Containers | Req/sec |
|---|---|
| 1 | 19200 |
| 2 | 19700 |
| 3 | 18700 |
| 4 | 18700 |
| 5 | 18300 |
| 6 | 18800 |
| 7 | 17900 |
| 8 | 18300 |

Much better! The Swarm LB does introduce a little overhead, but it's totally tolerable. What's more, if I run the benchmark against the node where the single container is running, the Swarm LB shows exactly the same performance as the directly exposed web server (22400 req/sec in my case).
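To put a number on the overhead, the loss relative to the 22400 req/sec baseline can be computed directly from the figures in this post. A minimal sketch; `overhead_pct` is a hypothetical helper name:

```shell
# Hypothetical helper: percentage of throughput lost relative to a baseline.
# Arguments: baseline req/sec, measured req/sec.
overhead_pct() {
    awk -v base="$1" -v got="$2" 'BEGIN { printf "%.1f\n", (1 - got / base) * 100 }'
}

overhead_pct 22400 19200   # Swarm LB, 1 container
overhead_pct 22400 4828    # Rancher LB, 8 containers
```

With these inputs, Swarm with one container comes out about 14% below the baseline, while Rancher with eight containers is still about 78% below it.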

To make this blog post less boring, I added a nice picture :)

I also ran JMeter (8 parallel threads). Direct: 6700 req/sec; Swarm: 6500 (1 container) to 5600 (8 containers); Rancher: 835 (1 container) to 2400 (8 containers). That is roughly the same picture as the AB results.
